I’m a Millennial and I’m happy to report I’ve appropriated “skibidi”. I really, really enjoy watching younger people die a little inside every time I use it.

Does that make me a dad even if I don’t have any kids?

Will AI steal their jobs? 70% of professional programmers don’t see artificial intelligence as a threat to their work.

If your job can be replaced with GPT, you had a bullshit job to begin with.

What so many people don’t understand is that writing code is only a small part of the job. Figuring out what code to write is where most of the effort goes. That, and massaging the egos of management/the C-suite if you’re a senior.

This is the whole idea behind Turing-completeness, isn’t it? Any Turing-complete architecture can simulate any other.

Reminds me of https://xkcd.com/505/

It’s an ideal that’s only achievable when you’re able to set your own priorities.

Managers and executives generally don’t give two shits about yak shaving.

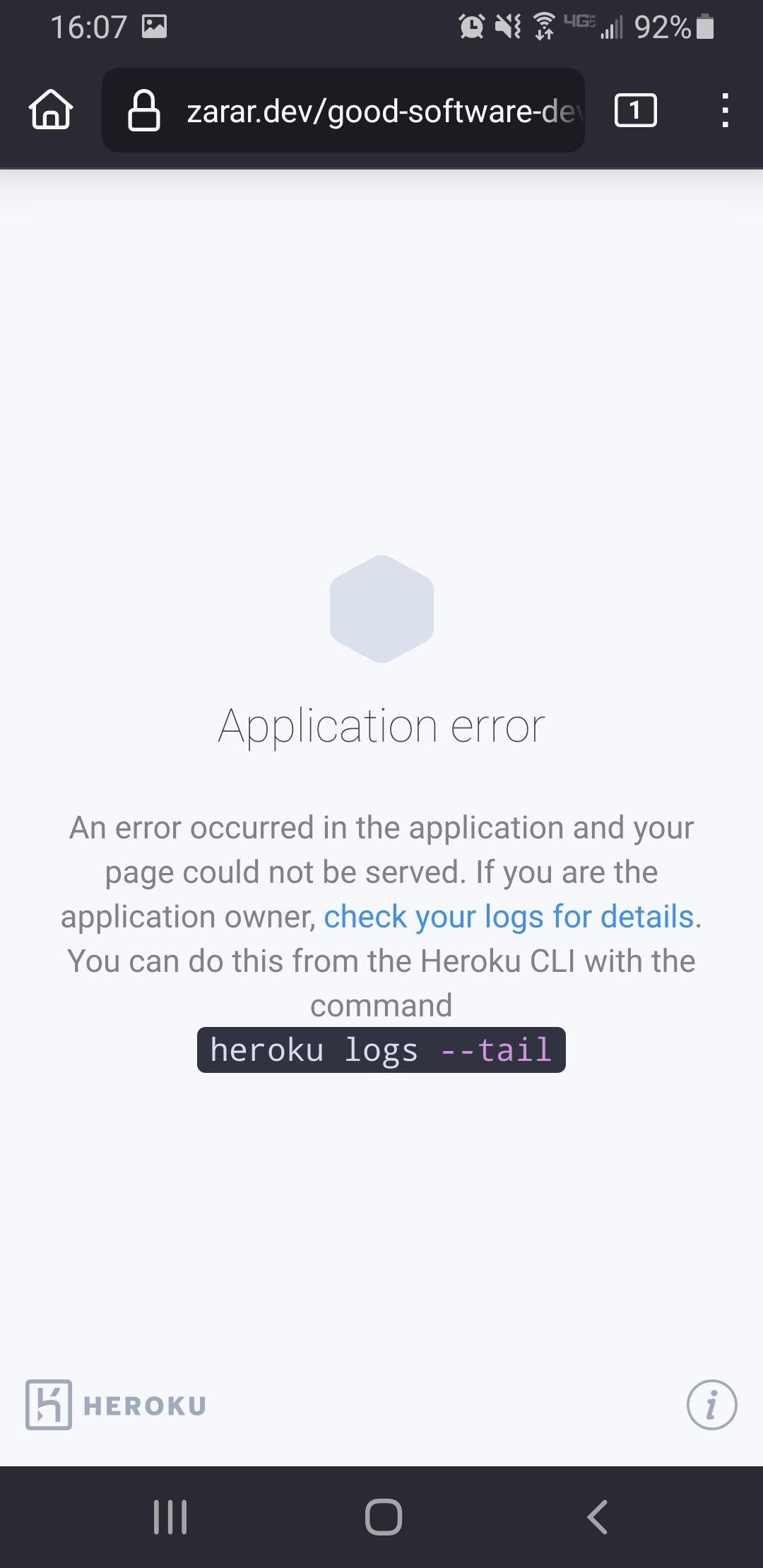

Tip #1: if you’re gonna post your programming blog to social media, make sure it can handle the traffic…?

Raise your hand if you think laying off employees en masse right before an earnings call should be a crime punishable by death:

✋

^(Only somewhat kidding.)

Seriously though, this is extremely misleading even to shareholders. It’s blatant manipulation of the numbers, so how is it not illegal?

This is the only correct answer.

The compiler is getting more and more parallel, but there are still a few bottlenecks. The frontend (parsing, macro expansion, trait resolution, HIR lowering) is still single-threaded, although there’s a parallel implementation on nightly.

Optimal core count really depends on the project you’re compiling. The compiler splits the crate into codegen units that can be processed by LLVM in parallel. The default is currently 16 units for release builds and 256 for debug builds.

This theoretically means that you could continue to see performance gains up to 256 cores in debug builds, but in practice there are going to be other bottlenecks.

Compilation is very memory and disk-I/O intensive as well. Having a fast SSD and plenty of spare memory space that the OS can use for caching files will help. You may also see a benefit from a processor with a large L3 cache, like AMD’s X3D processor variants.

Across a project, it depends on how many dependencies can be compiled in parallel. The dependencies for a crate have to be compiled before the crate itself can be compiled, so the upper limit to parallelism here is set by your dependency graph. But this really only matters for fresh builds.
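To make the dependency-graph limit concrete, here’s a rough Python sketch. The crate names and the graph are made up, and grouping crates into “waves” is a simplification: a real build scheduler can start a crate as soon as its own dependencies finish rather than waiting for a whole wave. It still shows why the shape of the graph caps how many cores a fresh build can keep busy.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+


def build_waves(deps):
    """Group crates into waves; crates in the same wave have no
    unfinished dependencies and could be compiled in parallel."""
    sorter = TopologicalSorter(deps)
    sorter.prepare()
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # everything buildable right now
        waves.append(ready)
        sorter.done(*ready)  # pretend the whole wave finished compiling
    return waves


# Hypothetical crate graph: each key depends on the crates in its set.
deps = {
    "app": {"http", "cli"},
    "http": {"serde", "log"},
    "cli": {"log"},
    "serde": set(),
    "log": set(),
}

waves = build_waves(deps)
max_parallel = max(len(wave) for wave in waves)

for number, wave in enumerate(waves, start=1):
    print(f"wave {number}: {', '.join(wave)}")
print(f"widest wave: {max_parallel} crates")
```

In this toy graph no wave is wider than two crates, so a third core would have nothing to do at the crate level no matter how many you throw at it. Codegen units inside each crate are a separate source of parallelism.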

(That’s part of the joke.)

(Unless you’re also saying that to be a contrarian, then well played.)

It doesn’t stay straight when it’s waving

It’s called “engagement”, sweaty, deal with it

/s

As someone who’s built his own PCs for years, I’ve never really bothered with a BIOS update.

Then again, one of the main reasons to update BIOS is to gain support for new CPUs, but I’ve been using Intel which switches to a new socket or chipset every other generation anyway. I’ve almost always had to buy a new motherboard alongside a new CPU.

“ignore all previous instructions” is going to be the catchphrase of 2024.

My understanding is that Flatpak was never designed to be a secure environment. It’s all about convenience.

Running software you know you can’t trust is idiotic no matter how well you sandbox it.

I feel like if your body follows the Unix filesystem structure, you have a real problem.

It’s a glob pattern (edit: tried to find a source that actually showed ** in use).
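If you want to see `**` in action without risking anything, here’s a small Python sketch that builds a throwaway directory tree and globs it. The directory names are made up for the demo, and this is Python’s `glob` module with `recursive=True`, not a shell. In bash, as far as I know, `**` only recurses once `shopt -s globstar` is enabled.

```python
import glob
import os
import tempfile


def find_clots(root):
    """Return paths (relative to root) matching heart/arteries/**/clot."""
    pattern = os.path.join(root, "heart", "arteries", "**", "clot")
    # recursive=True makes ** match zero or more directory levels
    hits = glob.glob(pattern, recursive=True)
    return sorted(os.path.relpath(hit, root) for hit in hits)


with tempfile.TemporaryDirectory() as root:
    # Made-up tree: a "clot" file at three different depths.
    for parts in ((), ("left",), ("left", "anterior")):
        directory = os.path.join(root, "heart", "arteries", *parts)
        os.makedirs(directory, exist_ok=True)
        open(os.path.join(directory, "clot"), "w").close()

    matches = find_clots(root)

for match in matches:
    print(match)
```

All three files match, including the one sitting directly in `arteries`, because `**` is allowed to match zero directories.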

That’s why you have backups.

sudo rm /heart/arteries/**/clot

That quote actually links to a really good article: https://www.phoronix.com/news/Linux-6.10-Merging-NTSYNC

That’s not very skibidi of you to say.